Thanks to some excellent recent posts by Gabriella Gonzalez I've been taking another look at bidirectional type checking, this time with the help of Dunfield & Krishnaswamy's well-written introductory and survey papers on the subject. To all of the above authors, my most hearty thanks, as I had a chance to discuss using these techniques in anger this week with folks who know a lot more about the subject than I do. If you're curious how modern type inference works, I recommend these introductions unreservedly.

When I first learned about Hindley-Milner type inference, I was struck by a few things: first, I'd just spent a semester learning first-order mathematical logic, and the inference rules were identical to the ones I'd just learned. Second, the type inference algorithm looked just like logic programming only without backtracking search. This bugged me for the longest time, but of course it turns out for this style of polymorphic type checking, you simply don't need search – you always get a most general type or you get a type error.

Bidirectional Typing: Dealing with more complicated type systems

As soon as the type system gets a little more complicated, though, it's easy to lose that "always the right answer" guarantee, and these days relatively few general-purpose programming languages try. Instead, when the going gets tough, we ask programmers to add explicit type annotations (which pure Hindley-Milner typing lacks) to their program. And it turns out even in languages that do allow variants of Hindley-Milner inference (like 90's versions of Haskell), well-placed type annotations in code provide useful signposts, and even if they aren't necessary to type inference they are incredibly useful for readers.

Dunfield & Krishnaswamy describe bidirectional type checking as a way for the language designer to systematically decide where type annotations should be required. Broadly, types for some program constructs should be inferred by looking at the code itself; when the going gets tough, types can be checked based on contextual information and explicit type annotations. In their survey, they lay out some criteria for making those choices. The first three criteria together basically say that the rules should fit together to check all the programs you want to check, without requiring unnecessary typing rules.

The fourth and last criterion is the tricky one: You shouldn't need too many or overly verbose annotations, and you should be able to predict where the annotations will belong (which shouldn't change wildly as the program evolves). They give some rules of thumb:

- Introduction rules should check an input type.

- Elimination rules should synthesize an output type.

- For other rules, make use of available information as much as possible. If the preconditions / subexpressions of a rule can be formed by pulling apart an input type, make it a checking rule. If there's a subexpression type that provides complete information about all the types in play, infer that and use it to check everything else and infer the result type.

This all comes together in the let rule (the rule for let x = a in b), which is the most interesting rule in Hindley-Milner type inference, and frustratingly isn't treated in detail in the D&K introductory paper. In Hindley-Milner, the let rule performs generalization, allowing us to write expressions like (let id = λx. x in ...) and assign id its most general type, ∀𝛼. 𝛼→𝛼. This generalization step is only performed for expressions bound in let bindings. In the lambda calculus (let x = a in b) is exactly the function application ((λx.b) a), but Hindley-Milner type inference will generalize a in the former but not in the latter.

How does bidirectional typechecking handle ((λx.b) a)? For a function application the output type and the argument type are fully determined by the type of the function being applied, so we try to infer the type of (λx.b). In the simple system in the tutorial, we require a type annotation on this function, since we can only check its type (indeed, the only places we ever need annotations are immediately surrounding a lambda like this). With a bit more extension, we can infer a type for this function without annotation. But we can't infer a polymorphic type for a. Indeed, if b requires x to be polymorphic, type checking b will fail – we'll use b at two different types (for example, if a were (λy. y), and we called (x 5) and (x true) in b, we'd be told that x can't take both an int and a bool as an input.

So instead we treat (let x = a in b) differently, attempting to infer a type for a, then using that type for x in b. But that still leaves open a question: are we checking or inferring the type of b? If we want the minimum number of typing rules, we should pick one or the other. But neither choice is perfect: If b is something we could easily infer, and we require it to be checked, we'll be adding obvious type annotations to our program. If b can't be inferred, but we had context we could have checked against, requiring an extra annotation in our program is unfortunate.

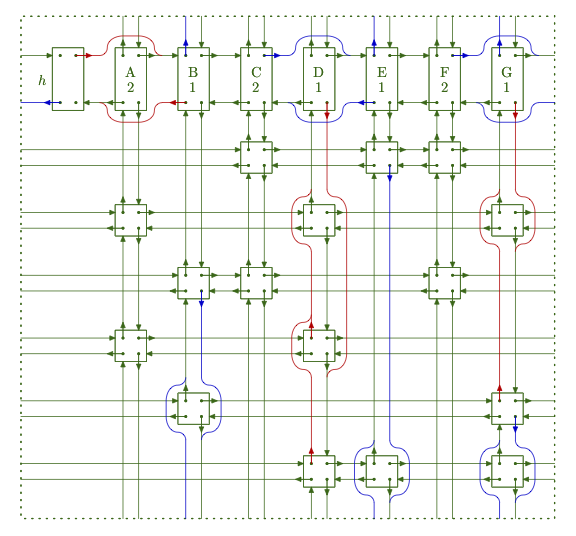

Part of the problem here, I think, is conceiving of bidirectional type checking as two functions:

I find it clearer to think of it as a single function:

That is, if the last argument is a type, we're checking; otherwise we're inferring. In either case we return the expression type, or we fail.

With this signature, I propose a slightly different set of rules of thumb from those proposed by D&K:

- Rules with no precondition (such as integer constants), or only contextual sanity checks (such as the variable rule which just looks up a variable in the type environment), always synthesize.

- Rules for which one more more preconditions mention exactly the output type should work as both inference and checking rules if the remaining preconditions make that possible (it should be easy to write this down as a single bidirectional rule, where the presence of an input type says that we're checking, and otherwise we're inferring).

- Otherwise, follow the other heuristics.

The addition of bidirectional rules is a way to keep the system compact while minimizing annotation burden, and it should still be obvious where to annotate (we shouldn't need to annotate such a bidirectional expression, but we might choose to do so – for example, we can imagine adding if to our language, and we can propagate bidirectionally to both the then and else branches, but if both require annotation we might prefer to annotate the if expression as a whole.

Or use search?

We could imagine an alternate universe where we can treat ((λx.b) a) similarly to (let x = a in b). Imagine we start by trying to infer a type for (λx.b), but fail due to the need for a type annotation. At this point we could try to make progress by instead inferring a type T for a. Now we can infer a type for b under the assumption that x:T. The tutorial introduction to bidirectional typing with inference essentially does the same thing in reverse for application, propagating the function type to operands using special rules.

But notice that we're now into full logic programming: we try one typing rule, and if that fails we backtrack and try again with a different rule whose structure of inference and checking is completely different. Is this a good idea?

- It lets us successfully type check more programs, or equivalently to type check some programs with strictly fewer type annotations than originally required.

- But it makes every function application a two-way branch point, so that the time to type check our program is exponential in the depth of function applications. We can work around this somewhat by making repeated application (f a b c d) pick a direction, but in general this concern has kept search from wide use for common language constructs like function application and let binding.

- Search is also viewed as leading to surprising behavior, where seemingly innocuous program changes (like adding an additional line of code) lead a previously checkable program to no longer type check.

That said, there is still be a useful role for search in providing better type errors. Imagine that, having encountered an error on our primary type checking path, we try some of the alternative paths. If any of these successfully type the program, they allow us tell the user what type annotations they can add to their program to type check.

Unspeakable types

It's worth mentioning one big problem with type annotations, and that's what I like to refer to as unspeakable types (as Nick Benton pointed out to me, they're actually called non-denotable types; I'll stick with unspeakable here as it's evocative). In a polymorphic type system like Hindley-Milner, if we're inside a polymorphic function we can't actually write down the type of any value that mentions one of the polymorphic types, unless we have some way of naming those types and talking about them. These types are unspeakable. To work around unspeakability we need a form of type annotation that not only says "This expression has thus-and-such a polymorphic type", but introduces a bunch of new types in its body. So, for example, I'd like to be able to write ((λx. (x : 𝛼)) : ∀𝛼. 𝛼→𝛼) and have the inner 𝛼 refer to the outer polymorphic type. In most presentations (including the D&K papers) this is not the case, and it looks like the typing rules to allow it can get hairy pretty fast: you need to start distinguishing syntactic types from the actual types you're inferring, and you need to translate between the two.

Unspeakable types don't just come up due to polymorphism – in Rust, for example, the type of a particular lambda expression is unspeakable, and the best you can do is some supertype of that lambda (this is a big problem if the type of your lambda expression ends up instantiating a polymorphic type variable of another type); this is one of the big places the impl Trait notation gets used a lot, meaning roughly "a specific type I can't or don't want to speak, but it's a subtype of this type". The documentation link above talks about "types that only the compiler knows" – unspeakable.

The lesson I'd like to take away from this is "don't make types in your system unspeakable", or perhaps "never require a type annotation in a context where there might be an unspeakable type". But in practice it isn't necessarily that easy, as Rust's example shows.